The Way In: Videogame as Composition and Composition as Videogame

By Stephan Moore & Scott Smallwood

Abstract

The acts of play involved in both creating and participating in music, sound installation, and videogames have parallels that are yet to be fully explored or even defined. In our work, we are attempting to chart out this territory, noting important features of this hybrid creative landscape and working towards a practice of interactive audio gaming. What if composers adopted the conventions of videogame design and videogame play when creating a new piece? What if videogame designers adopted the conventions of musical expression, in composition and performance, when designing a new game? We created the small game/composition The Way In as an initial response to these questions, following a practice-as-research methodology. This work grew in part out of previous projects of The Audio Games Lab, a research group based at the University of Alberta that is interested in all the ways sound, music, and soundscape can function within the medium of games, and vice-versa. It also grew out of our longstanding collaborative artistic project Evidence, and a recent multichannel sound installation we were beginning to work on when the COVID-19 lockdowns occurred. In this paper, we describe the artistic processes that led us to rethink our approach, given the new circumstances, and to create this work as an intertwining of musical and ludic processes. Using this example as an entry point, we uncover what we see as the new field of creative expression that opens up at this intersection, acknowledging notable precursors to this work, and suggesting directions for further fruitful inquiry.

Keywords: videogame, generative art, music, game development, music composition, ludomusicology

Biography

Stephan Moore is a sound artist, designer, composer, improviser, coder, teacher, and curator based in Chicago. His creative work manifests as electronic studio compositions, improvisational outbursts, sound installations, scores for collaborative performances, algorithmic compositions, interactive art, and sound designs for unusual circumstances. He is the curator of sound art for the Caramoor Center for Music and the Arts, organizing annual exhibitions since 2014. He is also the president of Isobel Audio LLC, which builds and sells his Hemisphere loudspeakers. He was the music coordinator and touring sound engineer of the Merce Cunningham Dance Company (2004-10), and has worked with Pauline Oliveros, Anthony McCall, and Animal Collective, among many others. He is an associate professor of instruction in the Sound Arts and Industries program at Northwestern University.

Scott Smallwood is a sound artist, composer, and performer who creates works inspired by discovered textures and forms, through a practice of listening, field recording, and improvisation. In addition to composing works for ensembles and electronics, he designs experimental instruments and software, as well as sound installations and audio games, often for site-specific scenarios. Much of his recent work is concerned with the soundscapes of climate change, and the dichotomy between ecstatic and luxuriating states of noise and the precious commodity of natural acoustical environments and quiet spaces. He performs as one-half of the laptop/electronic duo Evidence (with Stephan Moore) and teaches as an associate professor of composition at the University of Alberta, where he also serves as the director of the Sound Studies Institute.

Relevant projects: Evidence, Audio Games Lab.

Music Tending Towards Videogames: Characteristics of Generative Music Systems

In this paper we will explore the intersection of two realms of artistic expression, generative music and videogames. As composers who emerged from standard Western conservatory educations and then studied and practiced within the traditions of 20th-century avant-garde music, we have seriously engaged with the performance of improvised music and the creation of sound installation artworks. At the same time, we have been lifelong videogame players, and have eagerly followed along as that field has evolved into its present state of expressive diversity and maturity. As the ubiquity and accessibility of tools for developing videogames increased, we naturally began experimenting with creating hybrid works, drawing connections between these realms. This experimentation has shaped our practice and revealed new possibilities for creative exploration. Here, we will share some observations and experiences drawn from this expansion of our practice, tracing precedents for the connecting of these fields, and speculating about further hybrid forms that may arise from their combination.

The term “generative music” denotes music that results from the execution of a defined system of rules, and which usually has the potential to change with each realization [Eno 1996]. This distinguishes it from the vast majority of what is normally considered to be music, e.g. written scores or audio recordings that are intended to be reproduced identically, time after time. We see generative music as a broad category of musical creation, encompassing several musical forms that took shape in the latter half of the 20th century, including structured (or rules-based) improvisation, interactive music, and sound installation art.

One representative thread of generative music creation that has influenced our own process is found in the hybrid forms of composition/improvisation that grew out of Western avant-garde musical practice after 1950, in which performers are given a set of instructions, expressed via text, modified traditional musical notation, or newly invented forms of graphical notation, which they interpret in order to create sound. Many celebrated composers have created such generative systems, including Anthony Braxton, Pauline Oliveros, Christian Wolff, Annea Lockwood, Butch Morris, Earle Brown, and Cornelius Cardew, among many others. For example, the 1964 composition In C, by Terry Riley, supplies melodic material and a rhythmic structure to an unspecified group of musicians, but gives them the agency to determine how quickly they will progress through the piece, resulting in a heterophony that differs excitingly from performance to performance while remaining similar enough across performances for the piece to retain its identity [Parviainen 2017]. John Zorn’s Cobra, completed in 1984 as one of a series of “game pieces,” lays out a detailed set of rules governing the interactions between an ensemble of improvising musicians and a “prompter” who coordinates the group’s activities [Brackett 2010]. Even John Cage, who famously expressed his lack of interest in improvisation, allowed performers to make constrained spontaneous decisions in his later works, through mechanisms such as the “time window notation” in compositions like Four³ [Haskins 2017].
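
To make the idea of a rule-based, per-performance-variable system more concrete, the following Python sketch simulates an In C-like process in highly simplified form: every player moves through the same ordered list of phrases, but each decides at random when to advance. This is a hypothetical illustration, not a model of Riley's actual performance instructions; the advance probability, the `max_spread` limit on how far the fastest player may get ahead of the slowest, and the function name `perform` are our own assumptions.

```python
from __future__ import annotations

import random


def perform(num_players: int, num_phrases: int, steps: int,
            advance_prob: float = 0.2, max_spread: int = 3,
            seed: int | None = None) -> list[tuple[int, ...]]:
    """Simulate players moving through a shared, ordered list of phrases.

    Returns one tuple per time step giving each player's current phrase index.
    The fixed phrase order stands in for the piece's identity; the randomly
    timed advances stand in for the variation between performances.
    """
    rng = random.Random(seed)
    positions = [0] * num_players
    timeline = []
    for _ in range(steps):
        timeline.append(tuple(positions))
        slowest = min(positions)  # measured before anyone moves this step
        for i in range(num_players):
            at_end = positions[i] == num_phrases - 1
            too_far_ahead = positions[i] + 1 - slowest > max_spread
            if not at_end and not too_far_ahead and rng.random() < advance_prob:
                positions[i] += 1
    return timeline
```

Calling `perform(5, 53, 200, seed=1)` and `perform(5, 53, 200, seed=2)` (In C itself has 53 phrases) yields two different "realizations" of the same rules, while the same seed always reproduces the same one.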

Computer music systems that can make decisions on the fly, based on random number generation, data generated by other systems, or inputs provided by performers or participants, have broadened the scope of possibilities available to generative music. Such systems often blur the line between instrument and composition, as in Laurie Spiegel’s Music Mouse (first created in 1985 and iterated numerous times since) or Brian Eno and Peter Chilver’s Bloom (a 2008 smartphone app). These examples combine input from a human player with a system that behaves semi-predictably on the basis of randomness, negotiating a kind of collaborative relationship that may extend and elaborate upon the player’s creativity or, at some settings, generate music without any live input at all. Systems like Alvin Lucier’s Music for Solo Performer (1965) or Onyx Ashanti’s Sonocyb (developed from 2013 to the present) use electroencephalogram readings (brainwaves), heartbeats, and other biometric data to drive generative musical systems. Nick Didkovsky’s 2002 composition Zero Waste listens to a musician’s performance and uses it to dynamically generate musical notation on a screen that the performer then sight-reads, forming an ever-evolving feedback loop mediated through the technology. Countless examples exist of compositions driven by generative musical systems to varying degrees, deployed in as many contexts as one may encounter sensors and computing machines today [Didkovsky 2003].

Such systems are often at the heart of sound installation artworks, which frequently bring the environment into central consideration. In some installations, the spatial placement of sounds within an environment gives rise to an interactive system of its own. Max Neuhaus’s 1977 installation Times Square, which is still in operation today, features a sustained chord that emanates from a ventilation grill in the center of Manhattan’s Times Square. The piece foregrounds the interaction of this chord with the ceaseless urban noise and activity that surround it, generating an endless dialogue that has now continued for over 40 years. Listeners can move within the boundaries of Janet Cardiff and George Bures Miller’s The Forty Part Motet (2001) to create their own experience of a “fixed media” choral work from the 16th century: Spem in Alium, a composition by Thomas Tallis, played back over 40 speakers, one for each voice in the a cappella choir. The speakers are arranged in eight groups of five, one group per choir, and placed in a large oval, allowing for the intimate experience of a solitary voice, a focus on a single five-voice choir, or the perception of the entire ensemble sounding together, depending on where each listener stands. In both of these works, the interactive potential arises from the space in which the sounds are encountered, the placement of the loudspeakers, and the audience’s freedom to move within the space and explore different options.

Many sound installations actively rely on the participation of visitors, while still others interpret data, actions, and incidents of a real or randomly-generated nature. Wolfgang Buttress’s 2015 installation The Hive interprets the vibrations of a beehive through a large spatial array of lights and speakers. Many of the works created as part of Kaffe Matthews’ Music for Bodies project, such as Sonic Bed Marfa (2008), involve an “infinite-length” generative composition, orchestrated by software, and performed through an array of speakers and subwoofers that transmit vibrations directly into the listener’s entire body [Matthews 2008]. Suzanne Thorpe’s 2014 installation Listening is as Listening Does uses speakers and microphones to “echolocate” the positions of people and objects within a large, open courtyard, much as a bat uses bursts of ultrasonic frequencies to locate obstacles and insects. The information gathered from these echolocations is used to determine the next sounds to be produced [Thorpe 2014].

The characteristics embraced by these generative musical practices — performer agency within a set of rules, interactivity within a responsive technological framework, and the introduction of space and exploration as an element of musical expression — comprise areas of contemporary musical activity that, when taken together, form a significant overlap with contemporary work in the field of videogames. In the next section, we will look at examples of how these ideas exist in parallel in videogames and where resonance between these art forms already exists.

Videogames as a Venue for Generative Music, Generative Music as a Ludic System

For the world of interactive media, a critical moment has arrived. In the past decade, we have seen tremendous advancements in the technologies capable of delivering interactive content for devices as diverse as VR headsets, gaming systems, PCs, and smartphones. We have also seen the content of this media mature and grow more complex and nuanced, as videogames, in particular, have become intriguing sites for experimentation and artistic engagement. And now, during this time of crisis, as COVID-19 ravages the globe, games seem to have become more important than ever, with some reports suggesting that games are eclipsing other forms of entertainment, including movies and reading, and are increasingly used for education and community activities [Sharma 2020; Monahan 2021]. There is little question that videogames have come a long way since their beginnings, and there have been many declarations that videogames have arrived as a serious art form in their own right [Smuts 2005; Parker 2013; Deardorff 2015; Kerr 2017].

Videogames can be conceptualized as another genre of generative system for producing and delivering multimedia and multi-modal experiences, different on each realization. The content of videogames is not only audiovisual, but also kinaesthetic and, increasingly, haptic. In each game, a set of rules is established and then navigated by one or more players, whose actions are in dialogue with the system, responding to events, and provoking further events based on those actions. In a traditional videogame, the player’s performance is evaluated by a score that accrues, and progression through the game is measured by an advancement through checkpoints or levels, with each successive progression resulting in an increase in difficulty. However, without these characteristics of challenge, evaluation, achievement and progression, many games might resemble the sorts of generative systems we discussed in the previous section.

Sonic activity within games is often separated into layers, providing for the simultaneous playback of sounds confirming player actions, sounds alerting the player of actions within the game, environmental sound design elements, soundtrack elements, and other sonic functions that may be required to complete the auditory universe of the game. Because these games begin without a definite sense of how play will proceed, their audio media elements must be designed with non-linear execution in mind. Each layer should be modular and interruptible, dynamically constructed with enough flexibility to allow for any contingency within the parameters of the game. Prominent videogame sound designer Martin Stig Andersen draws a connection between this characteristic of videogame sound and other generative musical systems:

My entry into interactive media primarily came from working with sound installations … where everything could happen in the present, while people were experiencing it. That became a logical way into game development. It’s always a challenge when you have to do something for the first time, but the idea of the non-linear was in no way new for me, so I already had a lot of strategies [Andersen 2019].

Videogame composers and sound designers are already familiar with the concept of non-linearity in composition, but usually their sounds are used to support the narrative: confirming actions, reporting on offscreen events, and establishing mood [Collins 2007]. What if a game is instead conceived with sound as its main organizing feature, consigning all of the other elements of the game to a supporting role? This question was at the heart of a 2017 exhibition curated by Stephan Moore and Chaz Evans at the Video Game Art Gallery in Chicago. Titled “The Ears Have Walls: A Survey of Sound Games,” the exhibition featured over a dozen games in which sound plays a central role in the gameplay. Some games were designed to support a larger musical structure, such as Rob Lach’s POP: Methodology Experiment One (2012), where a series of mini-games accompany an album of pre-existing music tracks, or David Kanaga’s Oikospiel Book 1 (2017), which ambitiously borrows the structure of a five-act opera, full of generative compositions with recurring themes. Other games achieve audio-centrism by eliminating the visual elements completely, such as BlindSide (2012), an “audio-only adventure game” by Aaron Rasmussen and Michael T. Astolfi, or Dark Room Sex Game (2008), “an award-winning multiplayer, erotic rhythm game without any visuals, played only by audio and haptic cues,” created by the Copenhagen Game Collective. These examples have been an inspiration to us in thinking about how the videogame can be adopted for the purposes of creating sound-centric artworks [Evans and Moore 2017].

Some inventive videogames feature interactive listening puzzles and quasi-instrumental performative exploration as a central activity. Many games by the Czech studio Amanita Design, such as Samorost 3 (2016), involve interactive scenes that must be manipulated almost like musical instruments in order for the player to progress. Fract OSC (2014) by Phosfiend Systems extends this idea by turning the entire game into a huge modular synthesizer. The theme of each puzzle mirrors the logic and parametric control specific to a portion of a standard synthesizer system. As the player unlocks successive areas, a large synthesizer at the game’s central hub location is incrementally activated, such that when the game is completed, the player can then play the finished synthesizer as a surprisingly sophisticated instrument. Fernando Ramallo and David Kanaga’s Panoramical (2015) dispenses with all but the barest references to game mechanics, offering the player a dozen scenes for exploratory audiovisual improvisation through a sophisticated 18-parameter interface, without any particular goal other than the player’s own sensory overstimulation.

One exciting subset of sound games re-imagines the videogame as a delivery medium for the release of an album of music. Alongside POP: Methodology Experiment One and Oikospiel Book 1, mentioned above, sits the notable collaboration between recording artist Björk and media artist Scott Snibbe, Biophilia (2011). Each track of the album Biophilia is explored, extended, and sometimes rendered non-linear through a separate simple game, each of which serves to underscore the ecological message of the album. Another recent example of a game/album hybrid is Sayonara Wild Hearts (2019), a collaboration between pop musicians Daniel Olsén, Jonathan Eng, and Linnea Olsson and videogame studio Simogo, in which a series of pop music tracks shapes the fast-paced action and overall structure of the game. The game openly invites the player to replay each level, thereby developing an intimate familiarity with the music in order to improve their level of achievement.

In this survey of videogame sound innovation, we acknowledge that our list is biased towards works that fall within the territory that we are interested in exploring in our own creative work, and that many significant areas have been left unmentioned, including so-called “rhythm games.” Popular examples of these are Harmonix’s Guitar Hero (2005) and Rock Band (2007) or Konami’s Dance Dance Revolution (1998). Such games often make use of novel interfaces to engage the player in sound-synchronized actions. We have also not discussed the increasing popularity and diversity of videogame soundtracks, which are now rightfully seen as significant musical achievements, and are frequently made available to consumers in the same way as feature film soundtracks. While these developments emphasize the importance of sound within the field of videogames, they are less aligned with our own research questions.

As some musical creations have tended towards game-like structures and generative processes, and some videogame development has moved in the direction of audio-centric game design, we have begun to see the expressive possibilities at the intersection of these trajectories. We have become excited by what experimentation in this area might yield, and have begun a course of creative practice-as-research to mount an ongoing exploration. We see our work as unfolding in parallel with the field of Ludomusicology, a vital academic enterprise that, for ten years now, has championed the serious study of sound in videogames through an organization (the Society for the Study of Sound and Music in Games), annual conferences, and, more recently, the Journal of Sound and Music in Games. What follows is an account of our own paths towards the current moment of our work, the state of our practice today, and speculative projections about the future possibilities of this work.

The Road to The Way In

The acts of play involved in both creating and participating in music, sound installation, and videogames have parallels that are yet to be fully explored or even defined. In our work, we are attempting to chart out this territory, noting important features of this hybrid creative landscape and working towards a practice of interactive audio gaming. As mentioned above, our work over the past three decades has included both linear and non-linear compositional work, including interactive installations and improvised performances, often in collaboration with artists in other media (dance, video, etc.). Among our installation works, one piece in particular led us to begin thinking about the context of gaming, initially in terms of the exploratory nature that some games offer: The Karmic Teller Machine, or the KTM.

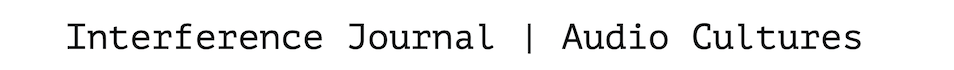

The KTM, in its original incarnation, consisted of a pentagonal booth shaped like a truncated cone, designed to accommodate one person at a time for a semi-private reading of their karmic balance. It stood at the front of our theme camp, the First Transdimensional Bank and Church (FTB&C). We enjoyed explaining to passersby that they already had an account, and that although withdrawals and deposits were not possible, they were welcome to check their balance by entering and pressing the glowing blue button. Upon doing this, the KTM yielded a 30-second sonic realization of their karmic balance (which was, in actuality, randomly selected from a pool of several hundred sounds we had created over many years). At an event like Burning Man, where participation is a primary mode of being, and where people wander and encounter artworks, performances, spectacles, and other strange experiences, the KTM became a kind of city-wide utility that hundreds of people visited and revisited throughout the week. Because it was on the playa for several years, it developed a history and became a staple experience in its neighborhood. In this way, the piece became part of participants’ personal narratives, within the context of the “exploration game” that is Burning Man [Moore et al. 2011].

Figure 1: The KTM at Burning Man 2016 (left). The KTM online (right). Photos by Scott Smallwood.

After several years of success with the KTM, we eventually decided to create an online version that could exist independently of the event. However, while the online piece offers another kind of experience, it has nowhere near the same impact or appeal, divorced as it is from the context of Burning Man. The success of the original KTM as a site-specific piece, connected to the Burning Man ethos, to our theme camp, and to the personal narratives of those who encountered it, whether through spontaneous discovery or intentional use, led us to realize that the overarching context of Burning Man was critical to its effectiveness. Indeed, both the original KTM and the online version are “one button” affairs in terms of interaction, but the act of using and experiencing the piece changes completely when it is part of a larger “world” and narrative.

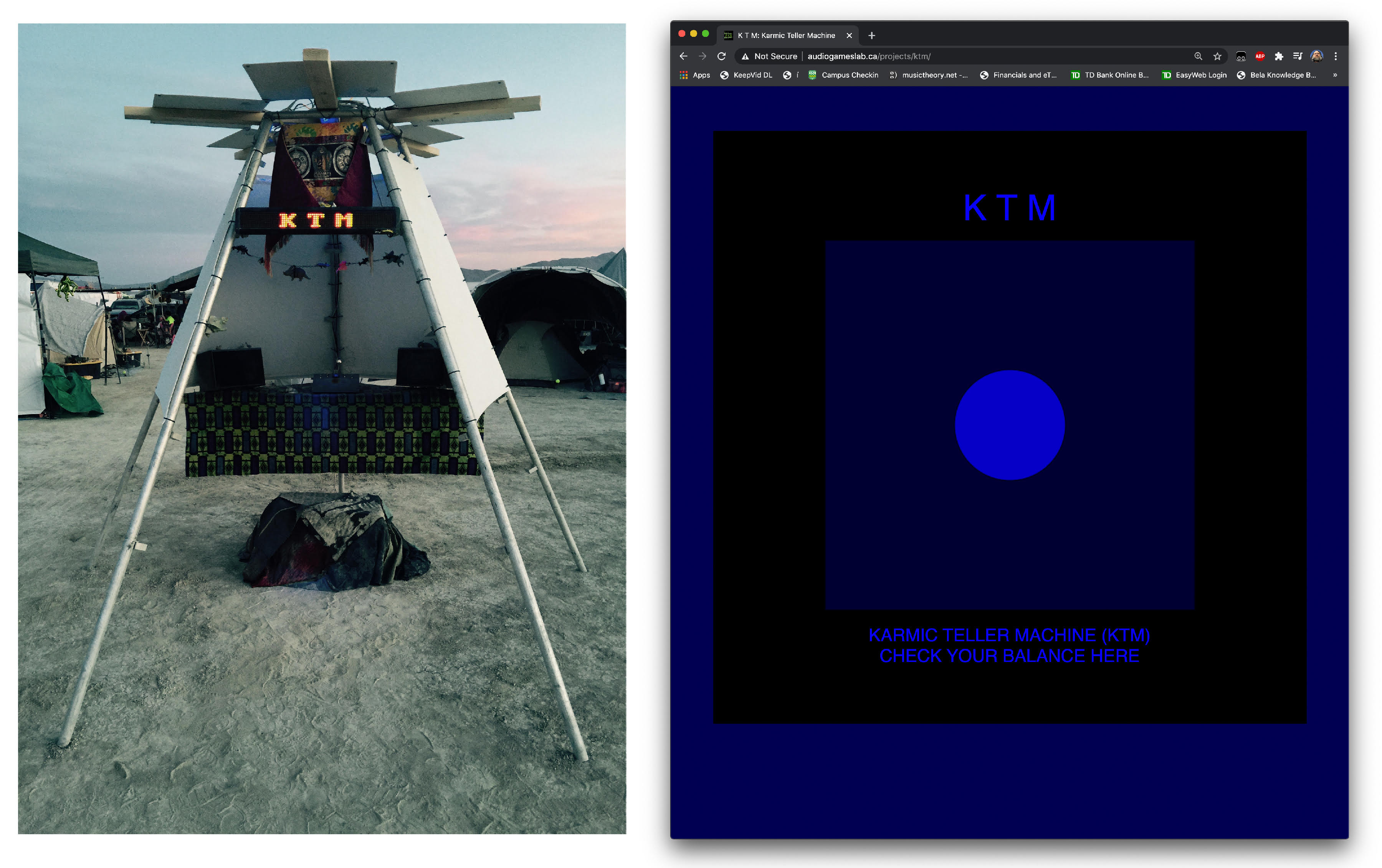

Another example of the latent gamification of an interactive installation grew out of Glory Road (2013), a theater work by the New York City-based company The Nerve Tank, for which a six-foot, steampunk-ish steel sphere was built to represent a Sisyphean boulder. During the performance, an actor playing Sisyphus would maneuver this sphere across a red carpet, rolling it into the proper orientation within a short window of time, over and over again; otherwise, the boulder would, figuratively, roll back down the hill, forcing Sisyphus to start over. The sphere’s orientation was detected by an accelerometer embedded at its center, and its state was communicated via sound from an array of twelve built-in speakers. This system was designed by Moore, who was then the resident composer for The Nerve Tank.

Figure 2: The sphere in Glory Road (left), and as Sisyphus 2.0 with director/welder Melanie Armer (right). Photos by Stephan Moore (2013, 2015).

After the run of the public theater production, the sphere was reprogrammed to run a deceptively simple game system: the orientation parameters of the sphere, measured in degrees of “pitch” and “roll,” were mapped onto the speed and pitch of two pulsing tones. Renamed Sisyphus 2.0, the sphere has taken on a new life as a public art piece. Audiences are invited to roll the sphere collaboratively, seeking the point where the pitch and tempo of the two pulsing tones match. When this point is found, the sphere explodes into a short, celebratory song and then resets itself, moving the pitch-matching orientation to a new position. In recent months, development has begun on Sisyphus 3.0, which will be an entirely virtual version of this game.
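
The mapping described above can be sketched in a few lines of Python. This is a minimal, hypothetical model, not the code running inside the sphere: we assume that one tone stays fixed as a reference while the other's frequency follows the sphere's pitch angle and its pulse rate follows the roll angle, so that both properties line up only at a hidden target orientation. The constants, the tolerance, and the names `SphereGame`, `movable_tone`, and `update` are all illustrative assumptions.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical tuning values; the installation's actual settings are not documented here.
REF_FREQ_HZ = 440.0          # pitch of the fixed reference tone
REF_TEMPO_BPM = 120.0        # pulse rate of the fixed reference tone
SEMITONES_PER_DEGREE = 0.1   # how far the "pitch" angle bends the movable tone
BPM_PER_DEGREE = 0.5         # how far the "roll" angle speeds up or slows the movable tone
TOLERANCE_DEG = 3.0          # how close to the hidden target counts as a match


def angle_diff(a: float, b: float) -> float:
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


@dataclass
class SphereGame:
    target_pitch: float = 0.0  # hidden orientation at which the two tones match
    target_roll: float = 0.0

    def movable_tone(self, pitch_deg: float, roll_deg: float) -> tuple[float, float]:
        """Map the sphere's orientation to (frequency in Hz, tempo in BPM)."""
        semitones = angle_diff(pitch_deg, self.target_pitch) * SEMITONES_PER_DEGREE
        freq = REF_FREQ_HZ * 2 ** (semitones / 12)
        tempo = REF_TEMPO_BPM + angle_diff(roll_deg, self.target_roll) * BPM_PER_DEGREE
        return freq, tempo

    def is_matched(self, pitch_deg: float, roll_deg: float) -> bool:
        """True when both angles are within tolerance of the hidden target."""
        return (abs(angle_diff(pitch_deg, self.target_pitch)) <= TOLERANCE_DEG
                and abs(angle_diff(roll_deg, self.target_roll)) <= TOLERANCE_DEG)

    def update(self, pitch_deg: float, roll_deg: float, rng: random.Random) -> str:
        """On a match, move the target and return 'celebrate'; otherwise 'pulse'."""
        if self.is_matched(pitch_deg, roll_deg):
            self.target_pitch = rng.uniform(-90.0, 90.0)
            self.target_roll = rng.uniform(-180.0, 180.0)
            return "celebrate"
        return "pulse"
```

Measuring angles relative to a hidden target, rather than against fixed absolute values, means that "moving the matching point" only requires changing two numbers, which mirrors the reset behavior described above.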

As these two works evolved, our relationship to games as players changed too. Discovering profoundly novel and experimental audio experiences within game design led us to consider the videogame as a genre, one that had previously seemed closed off to all but those with access to the complex production pipeline typified by traditional game studios. With the advent of powerful game design tools (such as Unity, Unreal, etc.) that were free to use and learn, it became possible to think of them as simply another tool in the toolkit, particularly since we both already had significant coding experience through platforms such as Max, ChucK, Pure Data, and other sound and media languages.

In 2015, Smallwood established the Audio Games Lab at the University of Alberta, which has since functioned as a research incubator and collaboratory for exploring the nexus between games, sound art, and composition. It exists as both a physical space at the university that includes a maker lab and a multichannel studio, and as a publisher/studio/platform for producing short game concepts and ancillary works that emerge from experimentation, and deploying them either as full game downloads, or presentations/installations in galleries, festivals, and other events.

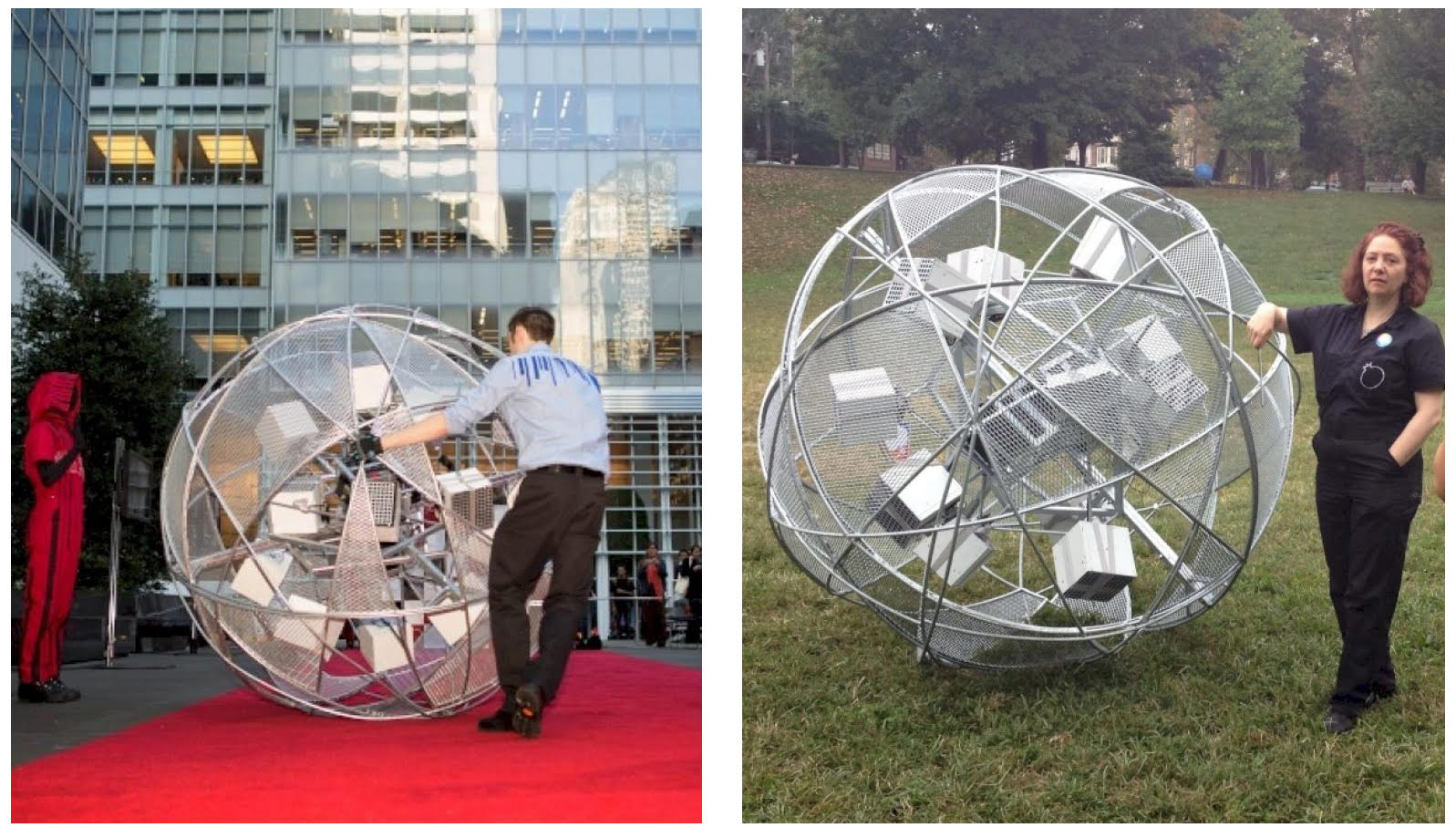

One of the first projects, Sync or Swim (2012-15), illustrates a category of particular interest to us: the sonic puzzles discussed above, considered as compositional spaces. Like the KTM, Sync or Swim was originally an installation piece, featuring a big knob, a button, and generative, repetitive sounds of syncopated beeping. It functioned as a kind of sonic combination lock, requiring the listener to manipulate the sounding texture in order to find the perfect sonic fit; in this case, the key was to listen for perfectly synchronized repetition. Eventually the piece developed into an app, and then into an idea for a repeatable mechanic within a larger game context.
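
As a hypothetical illustration of the combination-lock idea, the Python sketch below reduces Sync or Swim to two beeping loops: a fixed reference loop and a player loop whose period is set by the knob. Pressing the button checks whether the two loops stay aligned over several beats. The two-loop simplification, the period range, the tolerance, and the function names are our assumptions; the piece itself layers more complex, syncopated patterns.

```python
from __future__ import annotations


def knob_to_period(knob: float, min_ms: float = 200.0, max_ms: float = 800.0) -> float:
    """Map a knob position in [0, 1] linearly onto a loop period in milliseconds."""
    knob = min(max(knob, 0.0), 1.0)
    return min_ms + knob * (max_ms - min_ms)


def onsets(period_ms: float, count: int, offset_ms: float = 0.0) -> list[float]:
    """Onset times (ms) of a beep repeating every period_ms."""
    return [offset_ms + i * period_ms for i in range(count)]


def lock_opens(knob: float, reference_period_ms: float = 500.0,
               beats: int = 8, tolerance_ms: float = 10.0) -> bool:
    """Called on a button press: True if the player's loop stays in sync.

    Onsets are compared beat by beat, so a small period error accumulates
    over the window and eventually exceeds the tolerance.
    """
    reference = onsets(reference_period_ms, beats)
    player = onsets(knob_to_period(knob), beats)
    return all(abs(r - p) <= tolerance_ms for r, p in zip(reference, player))
```

Comparing onsets over several beats, rather than comparing periods directly, reflects the listening task: a small period mismatch is nearly inaudible on a single beat but accumulates into audible drift, so the player has to listen over time to find the fit.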

Sync or Swim raised interesting questions about (1) the sound of the piece itself, independent of its function as a game; (2) how interacting with the piece changes it, and the players’ perception of it; and (3) how all of this changes entirely when the platform changes. Treating the work as a smartphone game, for example, rather than as an interactive installation in a gallery, requires a different approach, since the user experiences of the two are entirely different. While the piece has gone through a number of iterations, it also spawned a whole series of questions about sound puzzle mechanics as compositional systems, and about what happens when several of them are placed in a game context. These questions remain central to the Audio Games Lab’s experiments.

Figure 3: Sync or Swim: 2012 installation version (left), 2016 app (right). Photos by Scott Smallwood.

Another work that emerged from the Audio Games Lab was Locus Sono (2016), which further explored the audio puzzle mechanic, this time through the soundscape of a large open environment. In this exercise, the idea was to create auditory spaces where careful listening revealed new information. Specifically, the game asked the player to explore the mostly empty space and listen for new sounds until they found a spot where three distinct sounds overlap. This “sweet-spot” mechanic was iterated on through the creation of themed levels, creating the opportunity to treat the mechanic as a compositional exercise [Smallwood 2017].
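
The sweet-spot mechanic can be sketched as a simple geometry check. In this hypothetical Python model, each sound source is audible within a circular radius with a linear distance falloff, and the listener is in the sweet spot when at least three sources can be heard at once. The falloff curve, the radii, and the names `SoundSource`, `audible`, and `in_sweet_spot` are illustrative assumptions rather than details of the game's implementation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SoundSource:
    name: str
    x: float
    y: float
    radius: float  # hypothetical audible range, in world units

    def gain_at(self, lx: float, ly: float) -> float:
        """Linear distance falloff: 1.0 at the source, 0.0 at or beyond the radius."""
        distance = math.hypot(lx - self.x, ly - self.y)
        return max(0.0, 1.0 - distance / self.radius)


def audible(sources: list[SoundSource], lx: float, ly: float) -> list[str]:
    """Names of the sources the listener can currently hear."""
    return [s.name for s in sources if s.gain_at(lx, ly) > 0.0]


def in_sweet_spot(sources: list[SoundSource], lx: float, ly: float,
                  required: int = 3) -> bool:
    """True when at least `required` distinct sounds overlap at the listener."""
    return len(audible(sources, lx, ly)) >= required
```

In this model, designing a themed level becomes partly a matter of placing sources so that their audible regions overlap only in a small, hard-to-find area.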

This work led to a more ambitious project, The Lost Garden, a multiyear effort that resulted in several new audio puzzle concepts, integrated into a more complex “escape game” scenario. Continuing the idea of puzzle mechanics as compositional systems, the game is a Myst-like exploration game that invites the player to experiment through listening, eventually unlocking new areas that ultimately lead to the lost garden itself, the central object of the game. The puzzles include spatial listening situations similar to those in Locus Sono, rhythmic puzzles, and a variety of sound-matching puzzles, in which the player is asked to select certain sounds given a specific grouping or contextual scenario. The game also has an underlying story, as games often do, told only through non-vocal sound recordings, hinting at a future Earth on which most natural spaces have disappeared due to catastrophic climate change and the worldwide spread of “urban crust,” recalling Isaac Asimov’s planet Trantor [Asimov 1951]. The player gradually realizes that they are searching for the last natural space: the lost garden. Beyond the game itself, the concepts behind this work have influenced several of our non-game projects in positive and surprising ways, demonstrating how fruitful and fulfilling a game development pipeline can be for focusing musical composition.

Figure 4: Scenes from The Lost Garden (2021). Images by Scott Smallwood.

The Way In

One Lost Garden mechanic that we felt was worth further exploration was a kind of “sonic maze,” which was implemented in one level of the game. The idea was to traverse a maze through sound — somewhat similar to, for example, the footsteps puzzle in Jonathan Blow’s game The Witness (2016). While the small maze in The Lost Garden was interesting, we had hoped for an opportunity to expand on the idea by having the sound unfold compositionally based on the player’s traversal of the maze path.

In 2020, we began work on a new multichannel sound installation for the 2020 Sonorities Festival. However, the festival’s cancellation due to the global pandemic led us to reconsider the project’s parameters and to use this as an opportunity to iterate on the sonic maze concept.

Initially, our plan was to create a multichannel installation for the Sonic Laboratory at the Sonic Arts Research Centre (SARC) at Queen’s University in Belfast, which was one of the programmed spaces of the 2020 Sonorities Festival. We planned to base the piece on some relatively new sound material we had generated at an artist residency at The Tank Center for Sonic Arts in Colorado during the summer of 2018. The piece followed a workflow we have used a few times previously, in which a generative multichannel installation piece is created first, and a new (stereo) album is then derived from the installation materials. Our previous albums Visuals (Contour Editions, 2013) and Go Where Light Is (Dead Definition, 2019) were created in this way.

Located in rural western Colorado, The Tank is a decommissioned 1940s-era steel-capped railroad water treatment tank, 60 feet in diameter and over 200 feet high, which has been turned into a performance and recording space. Inside, the sound echoes and reverberates “in circles like atoms in a supercollider. Twist(ing) away like a whirlpool, like a benign Charybdis. Expand(ing) and puls(ing) up and up and up.” [Zadra 2015, 2]. Indeed, the sound is so massive and disorienting that conversation was difficult even when we stood just a few feet apart.


Figure 5: The Tank. Outside of Rangely, Colorado. Photo by Scott Smallwood (2018).


During our brief residency there in August 2018, we arranged to have multiple microphones flown and spread throughout the space; we then set up our equipment and several speakers and improvised in the space for several hours, concluding with a performance open to the public. All of this was recorded as a multitrack Pro Tools session. Although most of the source material was generated by our laptop performance systems and played through speakers, we also worked with a live vocalist, Samantha Lightshade, who also served as our primary audio engineer. Unsurprisingly, the material we generated, using the Tank as a giant resonant processor, yielded extremely soupy and wildly complex sonic textures, captured from multiple points in the space.

This material was extremely difficult to work with, and we sat on it for about a year before we saw the call for works for the Sonorities Festival. The SARC Sonic Laboratory seemed an ideal facility for experimenting with these materials. We created an initial composition that we planned to finalize on-site, and then the COVID-19 pandemic hit, upending not only our plans for presenting the new piece but also the entire workflow we had planned for creating a new album.

Over the next few weeks, as all plans involving travel vanished, lockdown restrictions gave us a new incentive to work in studio-oriented ways, including making and playing games, and to reconsider the Tank-generated musical materials. The Tank’s strange acoustics, and the physical sensation of feeling so disconnected from the sound, are deeply disorienting. Its interior is so vast and dark that sonically it feels almost infinite. Crisp points and edges vanish; everything is smoothed out into endless swirling ribbons of sound. Localizing sound was simply impossible. One could get lost in the massive soup of it. We began to imagine what it would be like if there were a maze inside The Tank, with an open ceiling.

Rapidly, the two ideas converged: the concept of a maze with a sounding pathway, and the design of a virtual space for exploring the disorienting acoustics of the Tank. In this newly conceived game-world, the correct maze path “contains” the composition, which unfolds as the player progresses. If the player turns the wrong way, leaving the path, the music subsides. Could these recordings serve as source material for this sonic maze idea? How would the sound evolve as the player moves through the maze? Furthermore, how would the sounds contribute to the overall soundscape of the space? After straying from the correct path, would the player hear silence, or the ambiance of the empty tank? These questions became challenges to work through, and the result was the prototype “level 0” of a potential sounding maze game, which we dubbed The Way In.
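The fade is not specified in technical detail here (the game’s audio was built in ChucK via Chunity), so what follows is only a minimal Python sketch of one way the music could “subside” off the path. It assumes a grid maze described by a hypothetical `passages` mapping from each cell to its open neighbours, measures how many corridor steps the player’s cell is from the correct path with a multi-source breadth-first search, and crossfades from the music toward the empty-tank ambience over a hypothetical `fade_steps` distance. Choosing ambience rather than silence is just one answer to the question above. Counting corridor steps rather than straight-line distance keeps a dead end that runs alongside the path, behind a wall, from sounding as if it were on the path.

```python
from collections import deque

Cell = tuple[int, int]  # (column, row) of a maze cell


def steps_from_path(passages: dict[Cell, list[Cell]], path: list[Cell]) -> dict[Cell, int]:
    """Multi-source breadth-first search: for every reachable cell, the number of
    corridor steps to the nearest cell on the correct path (0 for path cells)."""
    dist = {cell: 0 for cell in path}
    queue = deque(path)
    while queue:
        cell = queue.popleft()
        for nxt in passages.get(cell, []):
            if nxt not in dist:
                dist[nxt] = dist[cell] + 1
                queue.append(nxt)
    return dist


def mix_for_cell(dist: dict[Cell, int], cell: Cell, fade_steps: int = 3) -> tuple[float, float]:
    """Return (music_gain, ambience_gain) for the player's current cell.

    Music is at full level on the path and fades linearly to silence over
    `fade_steps` cells (a hypothetical parameter); the empty-tank ambience
    fills in whatever the music gives up. Unknown cells count as fully off-path."""
    steps = dist.get(cell, fade_steps)
    music = max(0.0, 1.0 - steps / fade_steps)
    return music, 1.0 - music


# Usage: a three-cell path with a dead-end branch leading off its middle cell.
passages = {
    (0, 0): [(1, 0)],
    (1, 0): [(0, 0), (2, 0), (1, 1)],
    (2, 0): [(1, 0)],
    (1, 1): [(1, 0), (1, 2)],
    (1, 2): [(1, 1), (1, 3)],
    (1, 3): [(1, 2)],
}
dist = steps_from_path(passages, [(0, 0), (1, 0), (2, 0)])
print(mix_for_cell(dist, (1, 0)))  # on the path: (1.0, 0.0)
print(mix_for_cell(dist, (1, 3)))  # three steps off the path: (0.0, 1.0)
```

A continuous game world would interpolate between cells rather than switching gains per cell, but the same distance-to-path idea applies.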


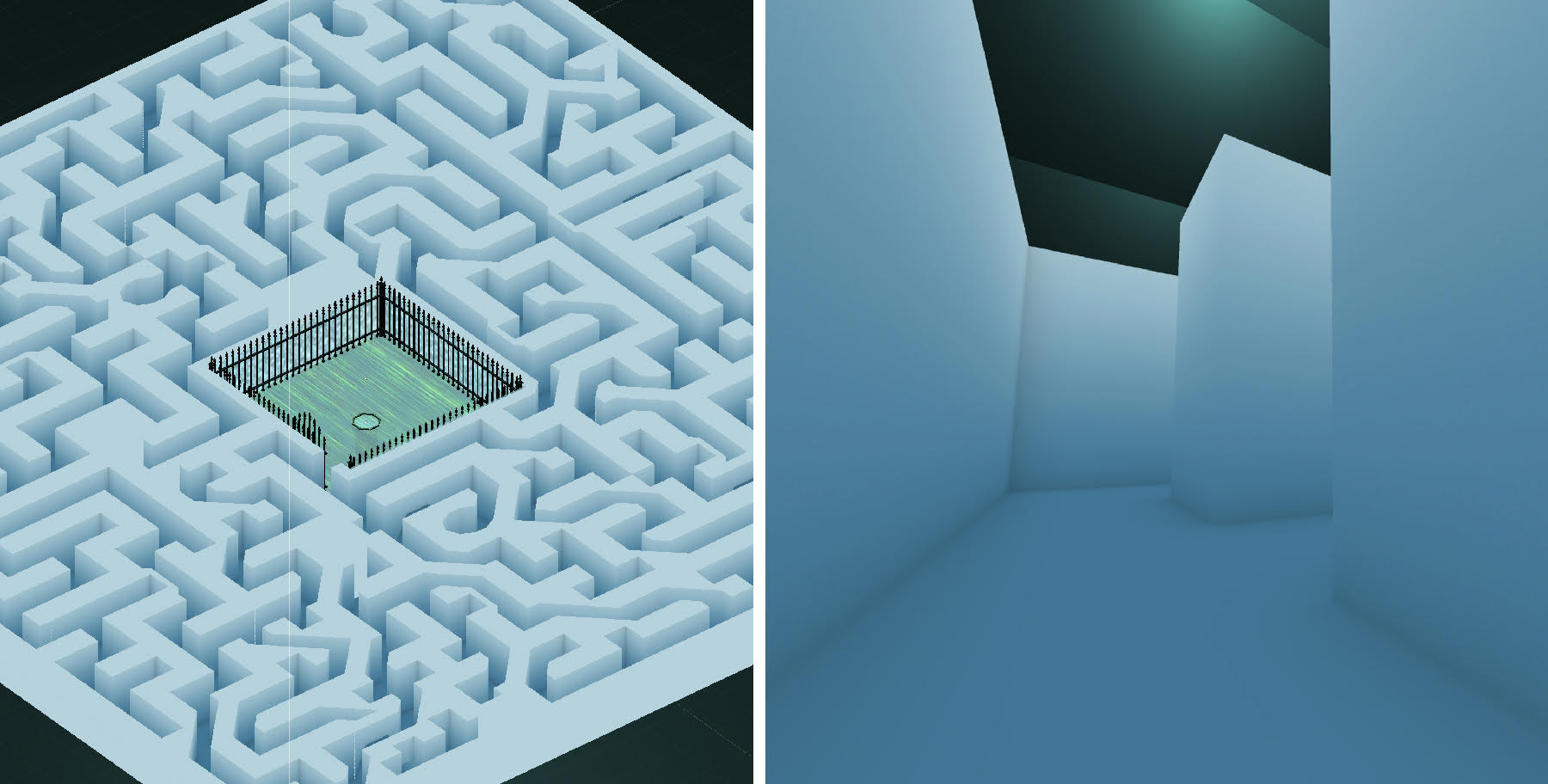

Figure 6. The Way In: top view (left), player view (right). Images by Scott Smallwood.


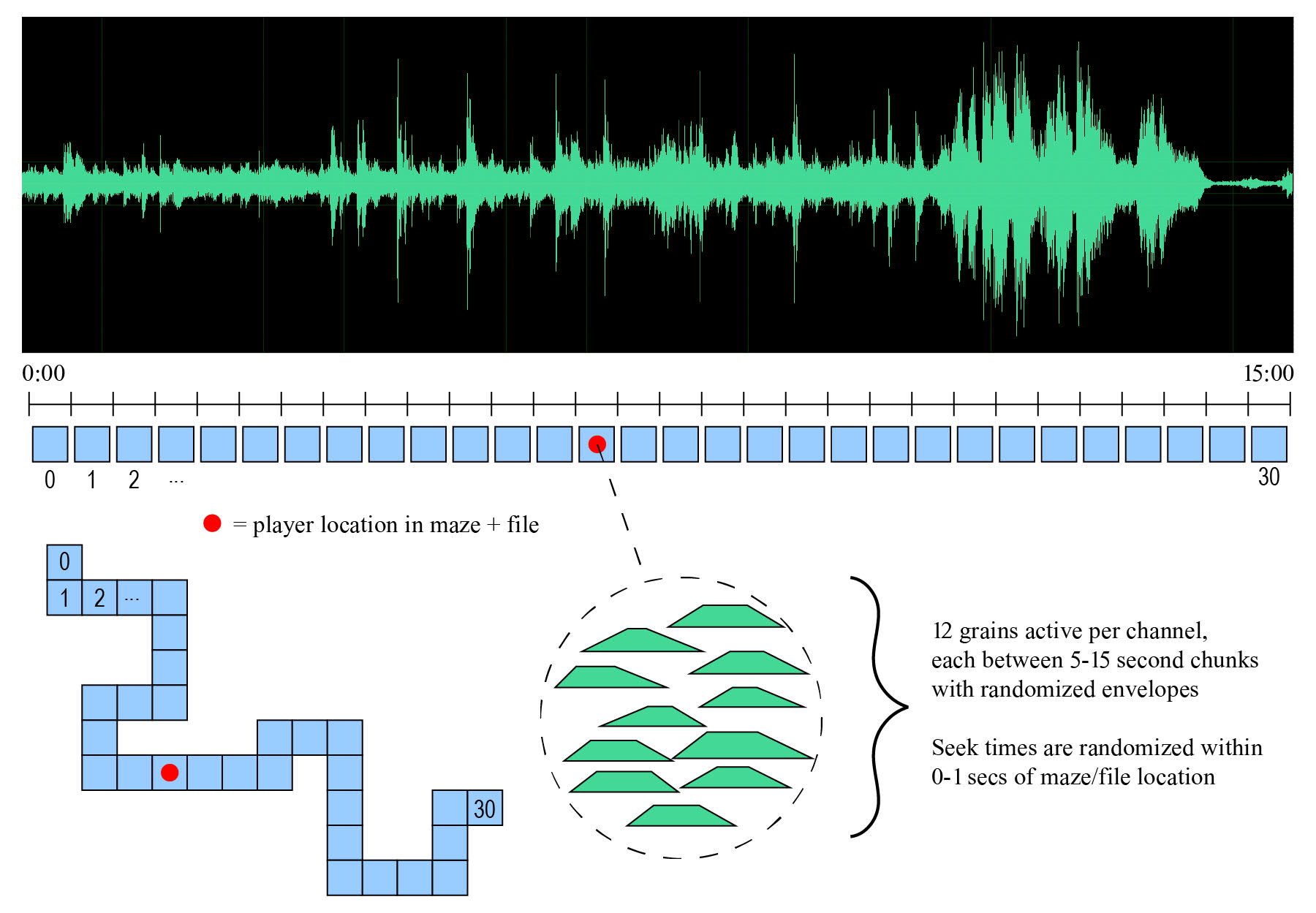

The underlying audio for The Way In is a 15-minute four-channel excerpt from one of our Tank recording sessions, each channel representing the audio captured by one of four different microphones. This material is subject to a granular process that works its way through the file over time, grabbing and playing back 10-15 second chunks (with multiple voices, overlapping each other) from within a window defined by the player’s position along the maze path (see Figure 7). As the player approaches the end of the path, they also approach the end of the 15-minute excerpt. Thus, as they play through the level, they traverse the musical excerpt quasi-linearly, dragging the window of the granular synthesis process along with them. The indistinct and ambient quality of the Tank-recorded sounds lends itself well to constant cross-fading. With experimentation, we landed upon a specific mix of voices, grain sizes, and process parameters which create an intriguing sequence of acoustical spaces to surround the player through the maze, ensuring that each part of the maze has a unique sonic color and quality of activity.
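The windowed granulation described above can be sketched as follows. This is not the ChucK engine used in the game; it is a minimal Python illustration that assumes the player’s progress along the path is already available as a fraction between 0 (entrance) and 1 (exit). The 15-minute length, four channels, and 10-15 second grains come from the text; the 60-second window width and the uniform random choice of channel, start, and duration are hypothetical. Each voice would call `next_grain` again when its current grain ends, so several voices produce overlapping grains that follow the player.

```python
import random
from dataclasses import dataclass

EXCERPT_SECONDS = 15 * 60          # 15-minute excerpt (from the text)
NUM_CHANNELS = 4                   # one channel per microphone (from the text)
GRAIN_MIN, GRAIN_MAX = 10.0, 15.0  # grain length range in seconds (from the text)
WINDOW_SECONDS = 60.0              # hypothetical width of the read window


@dataclass
class Grain:
    channel: int      # which microphone channel to read from
    start: float      # offset into the excerpt, in seconds
    duration: float   # grain length, in seconds


def window_for_progress(progress: float) -> tuple[float, float]:
    """Map the player's progress along the path (0 = entrance, 1 = exit)
    to a read window [lo, hi] that slides linearly through the excerpt."""
    progress = min(1.0, max(0.0, progress))
    lo = progress * (EXCERPT_SECONDS - WINDOW_SECONDS)
    return lo, lo + WINDOW_SECONDS


def next_grain(progress: float, rng: random.Random) -> Grain:
    """Pick one grain that lies entirely inside the current window."""
    lo, hi = window_for_progress(progress)
    duration = rng.uniform(GRAIN_MIN, GRAIN_MAX)
    start = rng.uniform(lo, hi - duration)
    return Grain(rng.randrange(NUM_CHANNELS), start, duration)


# Usage: halfway along the path, grains are drawn from 7:00 to 8:00 of the excerpt.
rng = random.Random(0)
print(window_for_progress(0.5))  # (420.0, 480.0)
for _ in range(3):  # e.g. three overlapping voices each requesting a grain
    print(next_grain(0.5, rng))
```

Because the window slides from the opening minute of the excerpt at the maze entrance to its final minute at the exit, the recording is traversed quasi-linearly while the order and overlap of grains within each window stay unpredictable.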


Figure 7: Long granulation process as it relates to maze location. This diagram shows only 30 segments; the actual number is much larger. Image by Scott Smallwood.


In The Way In, a fixed, linear sound recording underlies a similarly linear path through a maze. However, generative musical processes and the essential interactivity of gameplay complicate that linearity, so that the sonic experience of the game is generated anew in real time, with endless variations that adhere to the conditions of the player’s current location, creating a perpetually moving and breathing soundscape. No two play-throughs of the level will be the same, and the player’s agency within the work is the most significant source of variation and novelty. Yet, because of the fixed aspects of the work, there will always be a degree of recognizable similarity to this world, not unlike two play-throughs of another game or two performances of another musical composition.

The Future

At the moment, The Way In is only one maze, featuring one four-channel, 15-minute segment from our Tank sessions: very much a work-in-progress and the beginning of an odyssey. Our next step involves iterating through a level design process in which each level is linked to a different excerpt drawn from our Tank recording sessions. As we create each level, we plan to bring in 3D-graphics designers to create architectural assets for the maze elements, giving each maze a unique feel. The mazes themselves will be created with customizable maze-generation code. We are imagining what possibilities different varieties of mazes or spaces might afford us, based on the sounds we are working with and the variations we might make to the basic mechanics of the game. We expect this work to take us into the next year or so, emerging on the other side with a new album of generative music that is listened to by traversing mazes.
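No particular maze-generation algorithm is named here. As one common possibility, the sketch below uses a depth-first “recursive backtracker” (written iteratively) to carve a perfect maze, in which every pair of cells is joined by exactly one route, so the single correct path that would carry a level’s composition can then be recovered with a breadth-first search. The grid dimensions and `seed` are hypothetical customization parameters; a fixed seed reproduces the same maze, which would let a level’s layout stay paired with its excerpt.

```python
import random
from collections import deque
from typing import Optional

Cell = tuple[int, int]  # (column, row)


def generate_maze(width: int, height: int, seed: Optional[int] = None) -> dict[Cell, set[Cell]]:
    """Carve a perfect maze with an iterative depth-first search (recursive backtracker).

    Returns `passages`: each cell mapped to the neighbouring cells it is open to."""
    rng = random.Random(seed)
    passages: dict[Cell, set[Cell]] = {(x, y): set() for x in range(width) for y in range(height)}
    start = (0, 0)
    visited = {start}
    stack = [start]
    while stack:
        x, y = stack[-1]
        unvisited = [
            (x + dx, y + dy)
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
            if (x + dx, y + dy) in passages and (x + dx, y + dy) not in visited
        ]
        if not unvisited:
            stack.pop()  # dead end: backtrack to the previous cell
            continue
        nxt = rng.choice(unvisited)
        passages[(x, y)].add(nxt)  # knock down the wall between the two cells
        passages[nxt].add((x, y))
        visited.add(nxt)
        stack.append(nxt)
    return passages


def solution_path(passages: dict[Cell, set[Cell]], entrance: Cell, exit_: Cell) -> list[Cell]:
    """Breadth-first search for the route from entrance to exit
    (the only route, since the maze is perfect)."""
    parent: dict[Cell, Optional[Cell]] = {entrance: None}
    queue = deque([entrance])
    while queue:
        cell = queue.popleft()
        if cell == exit_:
            break
        for nxt in passages[cell]:
            if nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    path: list[Cell] = []
    cell: Optional[Cell] = exit_
    while cell is not None:
        path.append(cell)
        cell = parent[cell]
    return path[::-1]


# Usage: an 8 x 6 maze whose entrance-to-exit route would carry the composition.
maze = generate_maze(8, 6, seed=2020)
route = solution_path(maze, (0, 0), (7, 5))
print(len(route), route[0], route[-1])
```

Other generators (for example, ones that add loops or open chambers) would suit the variations in mechanics mentioned above, at the cost of the path no longer being unique.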

At the same time, we are considering what projects we might embark upon after The Way In, and what new tools might be valuable in developing the next project. In our previous work we have used Chunity, a plugin for the Unity game development engine that allows code written in ChucK, a real-time audio programming language, to be embedded in a Unity project. This has given us the ability to create generative sound in sophisticated ways; for example, it enabled us to build the granular synthesis engine for The Way In. Many other such plugins exist, with potential advantages yet to be explored. Audio middleware applications, such as FMOD and Wwise, similarly allow for more sophisticated approaches to implementing interactive audio, while spatial audio plugins, such as the Oculus Spatializer or Google Resonance, allow for a more nuanced approach to sound localization. With the advent of new virtual reality platforms, there is now demand for increasingly realistic and immersive soundscapes, and multiple developers are investing significantly in research, keeping their rapidly evolving software free or low-cost in a play to establish an early user base. A front-row seat to the latest audio technologies is therefore readily accessible to anyone with some basic game development skills and audio know-how. We hope that this accessibility might increase the number of people working in this area, yielding greater innovation in game audio.

We have also begun to conceive our next game, inspired by the experience of listening while traveling through the “deep playa” area of Burning Man, a wide-open space away from the residential areas of the city, sparsely filled with interactive artworks. The 2020 virtual Burning Man event “The Multiverse” spurred us in this direction, providing a virtual-geographical proof of concept. Tentatively titled The Way Out, this game will trade confining mazes for vast, open areas studded with large sculptural objects, and the paths between these objects will be infused with sound. Instead of each path containing only its own composition, we imagine creating a kind of modular music in which the intersections of paths form nodes through which a larger meta-composition can be navigated. Audio processing capabilities, collected through mini-games embedded in the sculptures, will allow the player to participate in musical co-creation with the game. Creating this meta-composition will require developing a system of musical composition that does not yet exist, and which can only be fully experienced through gameplay.

To us, the potential of this hybrid creative space feels limitless, yet at the same time nascent. It seems inevitable that the coming years will continue to bring new innovations, technologies, methods, and paradigms for creative expression through sound. We are all only now looking up to the sky and beginning to grasp how much there is to explore. It is our sense that we are standing at the edge of a new era in sonic expression.

Bibliography

Andersen, M. S. 2019. DEATH IN DESIGN: Martin Stig Andersen (INSIDE interview) [online]. Available: https://www.youtube.com/watch?v=OEzkYUwGXmA, accessed 13 January 2021.

Asimov, I. 1951. Foundation. New York: Gnome Press.

Blow, J. 2016. The Witness. Thekla Inc (multiple platforms).

Brackett, J. 2010. “Some Notes on John Zorn’s Cobra,” American Music, Vol. 28, No. 1 (Spring 2010).

Brinks, M. 2018. “Experimental Games Invigorate the Industry, So Why Don’t They See More Coverage?,” Forbes 28 August.

Collins, K. 2007. “An Introduction to the Participatory and Non-Linear Aspects of Video Games Audio,” in Hawkins, S. and J. Richardson, (eds.) Essays on Sound and Vision. Helsinki: Helsinki University Press.

Deardorff, N. 2015. “An Argument That Video Games Are, Indeed, High Art,” Forbes 13 October.

Didkovsky, N. 2003. Zero Waste, for Kathleen Supove. Available: http://www.punosmusic.com/pages/zerowaste/zerowaste_premiere_transcription.html, accessed 13 January 2021.

Eno, B. 1996. “Generative Music,” In Motion Magazine 7 July.

Evans, C. and S. Moore. 2017. “The Ears Have Walls: A Survey of Sound Games” [online]. Available: https://www.videogameartgallery.com/events/2017/10/10/the-ears-have-walls-a-survey-of-sound-games, accessed 11 January 2021.

Gottschalk, J. 2016. Experimental Music Since 1970. London: Bloomsbury.

Haskins, R. 2017. Anarchism and the Everyday: John Cage’s Number Pieces [online]. Available: https://robhaskins.net/2017/05/25/anarchism-and-the-everyday-john-cages-number-pieces/, accessed 13 January 2021.

Kerr, A. 2016. Global Games: Production, Circulation, and Policy in the Networked Era. London: Routledge.

Marshall, B. 2008. “Brian Eno’s Bloom: new album or ambient joke?,” The Guardian 14 October.

Matthews, K. 2008. Sonic Bed Marfa. Available: https://musicforbodies.net/sonic-bed/marfa/, accessed 12 January 2021.

Monahan, S. 2021. “Video Games Have Replaced Music as the Most Important Aspect of Youth Culture,” The Guardian 11 January.

Moore, S., D. Ogawa and S. Smallwood. 2011. “Metafiscal Services in the Middle of Nowhere,” Radical Aesthetics and Politics: Intersections in Music, Art, and Critical Social Theory, Hunter College, CUNY.

Parker, L. 2013. “A Journey to Make Video Games into Art,” The New Yorker 2 August.

Parviainen, T. 2017. Terry Riley’s “In C” – A Journey Through a Musical Possibility Space. Available: https://teropa.info/blog/2017/01/23/terry-rileys-in-c.html, accessed 13 January 2021.

Sharma, R. 2020. “People Aren’t Reading or Watching Movies. They’re Gaming,” New York Times 15 August.

Smallwood, S. 2017. “Locus Sono: A Listening Game for NIME,” in Proceedings of the 2017 New Interfaces for Musical Expression Conference, Copenhagen, Denmark, 15-18 May.

Smuts, A. 2005. “Are Video Games Art?,” Contemporary Aesthetics. 2 November.

Thorpe, S. 2014. Listening is as Listening Does. Available: https://www.caramoor.org/music/sonic-innovations/past-exhibitions/in-the-garden-of-sonic-delights/listening-is-as-listening-does/, accessed 10 January 2021.

Zadra, H. 2015. “From Steam Age to World Music Stage: The History of Rangely’s ‘Tank’,” Waving Hands Review 7.7.